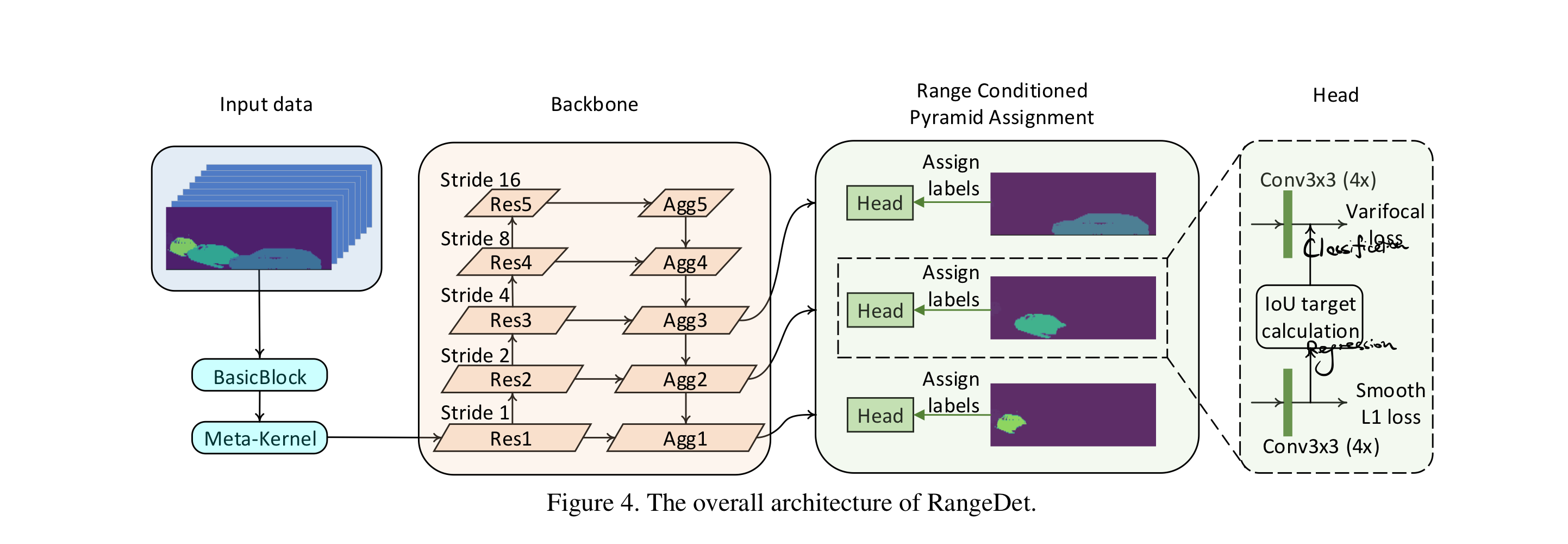

Full range view detection.

Motivation

Three problems with range view:

- Scale variation between nearby and faraway objects

    - FPN to the rescue: associate each label with a prediction level by range.

    - The paper calls it Range Conditioned Pyramid (RCP).

- Convolution is done on the 2D range image, but the output we want is in 3D space.

    - Meta kernel that also takes in 3D Cartesian information (the key bit)

- How to utilize the compact 2D range view

    - Weighted non-maximum suppression (not so interesting)
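
To make the "associate label with prediction by range" idea concrete, here is a minimal sketch of a range-conditioned assignment. The interval boundaries in `RANGE_BINS` and the helper name `assign_level` are my own illustrative assumptions, not values taken from these notes; the only point is that an object's range, rather than its box size, picks the pyramid level.

```python
# Hypothetical range intervals in metres, one per pyramid level.
# The boundaries are illustrative assumptions, not values from these notes.
RANGE_BINS = [(0.0, 15.0), (15.0, 30.0), (30.0, 80.0)]


def assign_level(obj_range, bins=RANGE_BINS):
    """Return the index of the pyramid level whose interval contains obj_range.

    Intervals are half-open [lo, hi). Returns None if the object lies
    outside every interval, in which case no level is trained on it.
    """
    for level, (lo, hi) in enumerate(bins):
        if lo <= obj_range < hi:
            return level
    return None


# Example: objects at 8 m, 22 m and 55 m land on three different levels.
levels = [assign_level(r) for r in (8.0, 22.0, 55.0)]
```

Compared with assigning by box area as in a vanilla FPN, this keeps objects at similar distances on the same level regardless of their physical size.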

Deep Dive

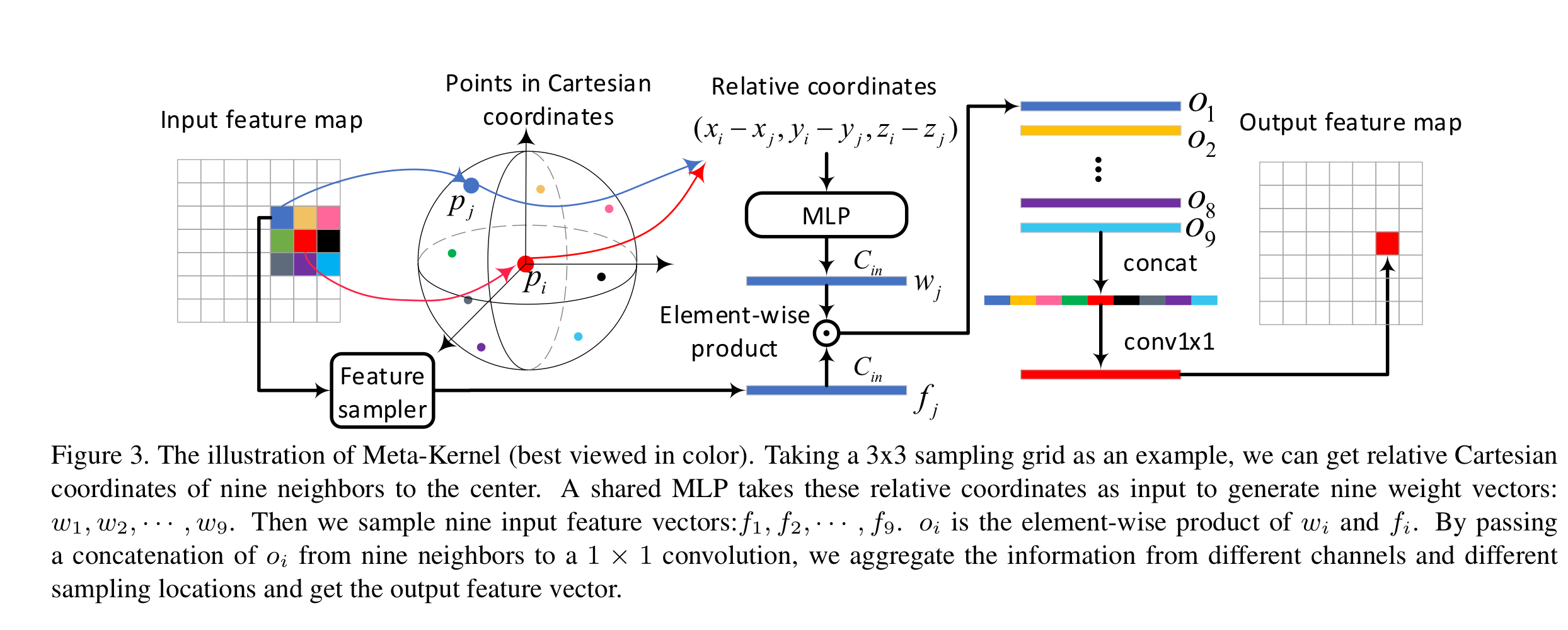

Meta Kernel: TL;DR:

I want to put Cartesian info into the features, but that’s hard. So I put it into the kernel weights instead. That’s too expensive, so I borrow an idea from MobileNet.

Let’s recall a normal CNN kernel, which is of size $k \times k$. The weight-sharing mechanism means that if a kernel is $3 \times 3$, it has just 9 unique values per input channel, reused at every spatial location. See here for a quick review of how a normal CNN works:

CS231n Convolutional Neural Networks for Visual Recognition

https://cs231n.github.io/convolutional-networks/

For the convolution to use Cartesian information, that information has to enter the computation somewhere. Instead of adding it to the features, we can incorporate it into the kernel weights. This means relaxing weight sharing, since the weights now have to change with the Cartesian coordinates. The actual weights are computed by an MLP.

Notice the strange element-wise product there. This is because it uses a depth-wise convolution here, similar to MobileNet. By first doing a per-channel convolution and then using a $1 \times 1$ convolution to expand to the output channels, it saves computation.
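
The whole pipeline described above can be sketched in NumPy. This is a rough sketch under my own assumptions, not the paper's implementation: the MLP is a two-layer ReLU network, its input is the neighbor's Cartesian position relative to the center pixel, and the function name `meta_kernel` and all parameter names are hypothetical. Border pixels are zero-padded, which a real implementation would probably handle with a validity mask.

```python
import numpy as np


def meta_kernel(features, xyz, w1, b1, w2, b2, w_out, k=3):
    """Sketch of a meta-kernel style convolution on a range image.

    features: (H, W, C) per-pixel features
    xyz:      (H, W, 3) per-pixel Cartesian coordinates
    w1, b1, w2, b2: parameters of a small MLP mapping 3 -> hidden -> C
    w_out:    (k*k*C, C_out) weights of the final 1x1 convolution
    """
    H, W, _ = features.shape
    pad = k // 2
    # Zero-pad so every pixel has a full k x k neighborhood (a simplification).
    f_pad = np.pad(features, ((pad, pad), (pad, pad), (0, 0)))
    p_pad = np.pad(xyz, ((pad, pad), (pad, pad), (0, 0)))

    weighted = []
    for dy in range(k):
        for dx in range(k):
            f_n = f_pad[dy:dy + H, dx:dx + W]        # neighbor features, (H, W, C)
            rel = p_pad[dy:dy + H, dx:dx + W] - xyz  # offset to the center, (H, W, 3)
            hidden = np.maximum(rel @ w1 + b1, 0.0)  # MLP layer 1 with ReLU
            w = hidden @ w2 + b2                     # per-channel weights, (H, W, C)
            weighted.append(w * f_n)                 # element-wise (depth-wise) product

    stacked = np.concatenate(weighted, axis=-1)      # (H, W, k*k*C)
    return stacked @ w_out                           # 1x1 conv to C_out channels


# Usage with random parameters, only to check that the shapes line up.
rng = np.random.default_rng(0)
H, W, C, hidden, C_out = 4, 6, 8, 32, 16
out = meta_kernel(
    rng.normal(size=(H, W, C)),
    rng.normal(size=(H, W, 3)),
    rng.normal(size=(3, hidden)), np.zeros(hidden),
    rng.normal(size=(hidden, C)), np.zeros(C),
    rng.normal(size=(9 * C, C_out)),
)
print(out.shape)  # (4, 6, 16)
```

The saving comes from the MLP emitting only C weights per neighbor instead of a full C × C_out matrix; the channel mixing is deferred to the shared 1×1 convolution at the end.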