This is quite a wordy paper. LLM interference has a prefill and a decoding stage. Their characteristic is different. If we put them on different GPU cluster with auto-scaling algorithm, things run faster.

The prefill step deals with a new sequence, often comprising many tokens, and processes these tokens concurrently. Unlike prefill, each decoding step only processes one new token generated by the previous step. This leads to significant computational differences between the two phases. When dealing with user prompts that are not brief, the prefill step tends to be compute-bound. For instance, for a 13B LLM, computing the prefill of a 512-token sequence makes an A100 near compute-bound (see §3.1). In contrast, despite processing only one new token per step, the decoding phase incurs a similar level of I/O to the prefill phase, making it constrained by the GPU’s memory bandwidth

Here it’s mentioned that the decoding step only looks at the new token per step since there is KV cache. This needs to be shared in both step. So there need to be a copy process to update the cache from prefill step to decoding step.

Things are also complicated when the model is too large to fit into a single GPU. We can do this by model parallellism. We can partition the big model by computationally intensive operations (infra-operator), or by stage (inter-operator). They have different compute / memory performance behavior.

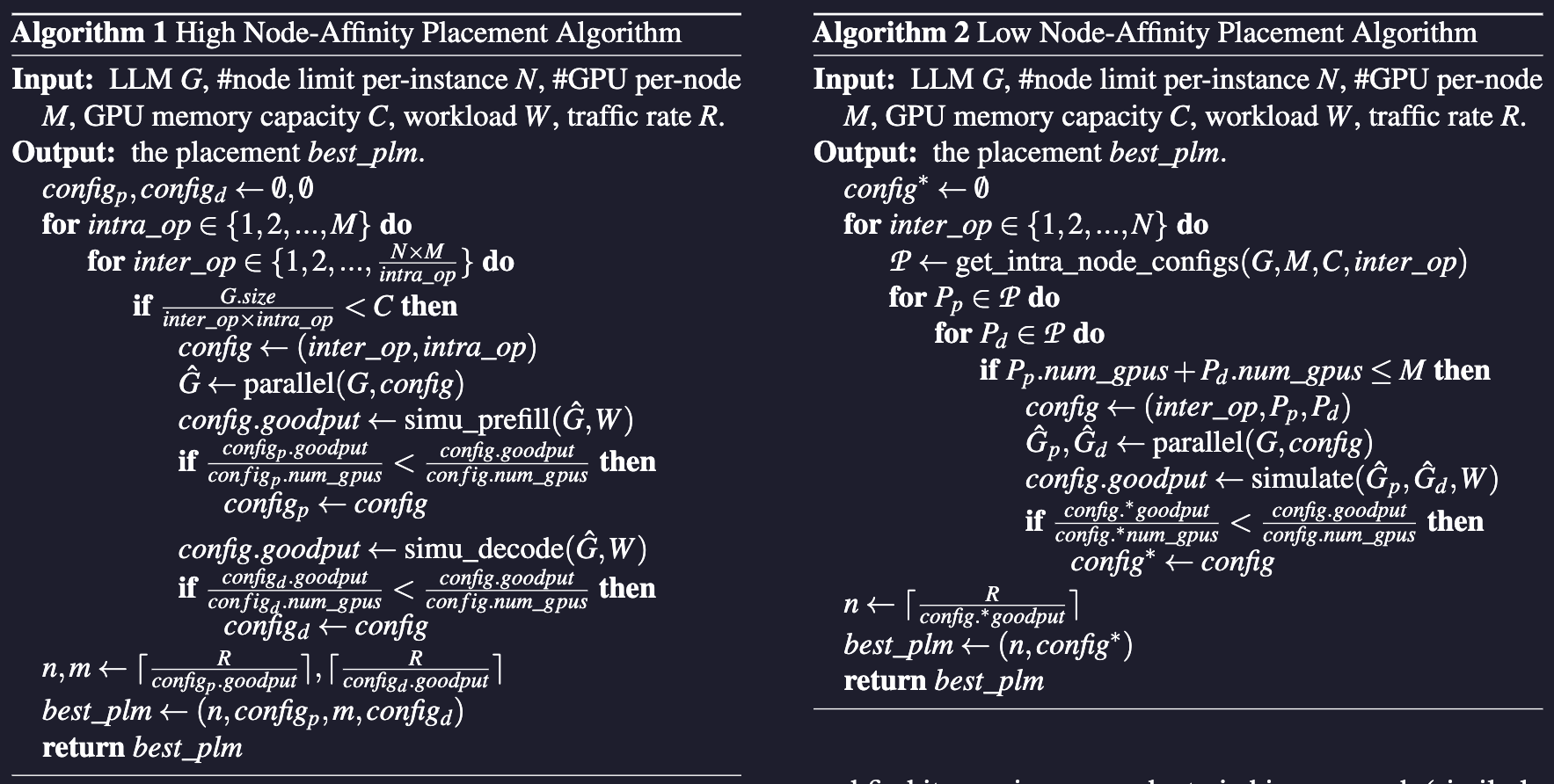

This is very simple algorithm:

- Grid search the parallellism options, use the simulator to find the best option, and then use it.